
Yanming Xiu (修彦名)

Greetings! I am a third-year Ph.D. candidate in the Intelligent Interactive Internet of Things (I³T) Lab, Department of Electrical and Computer Engineering, Duke University, in Durham, NC, where I work on computer vision, deep learning, and medical imaging. My advisor is Dr. Maria Gorlatova. Before coming to Duke, I earned my B.Eng. in Automation and an honors undergraduate degree from Zhejiang University in 2022. I also worked as a research assistant at the University of Hong Kong in 2021.

CV / Email / GitHub / LinkedIn / Google Scholar


Research Interests

I'm interested in machine learning and its applications in computer vision, especially Augmented Reality (AR) and Virtual Reality (VR). I am also interested in generative AI and its applications. My current research focuses on: (1) addressing user safety issues in AR experiences by modeling and detecting detrimental virtual content; and (2) assessing virtual content quality with automated systems that leverage generative AI.

Experiences


Education


Honors


Publications (*equal contribution)

2025

2024


Other Projects

Some of my previous projects are listed below:


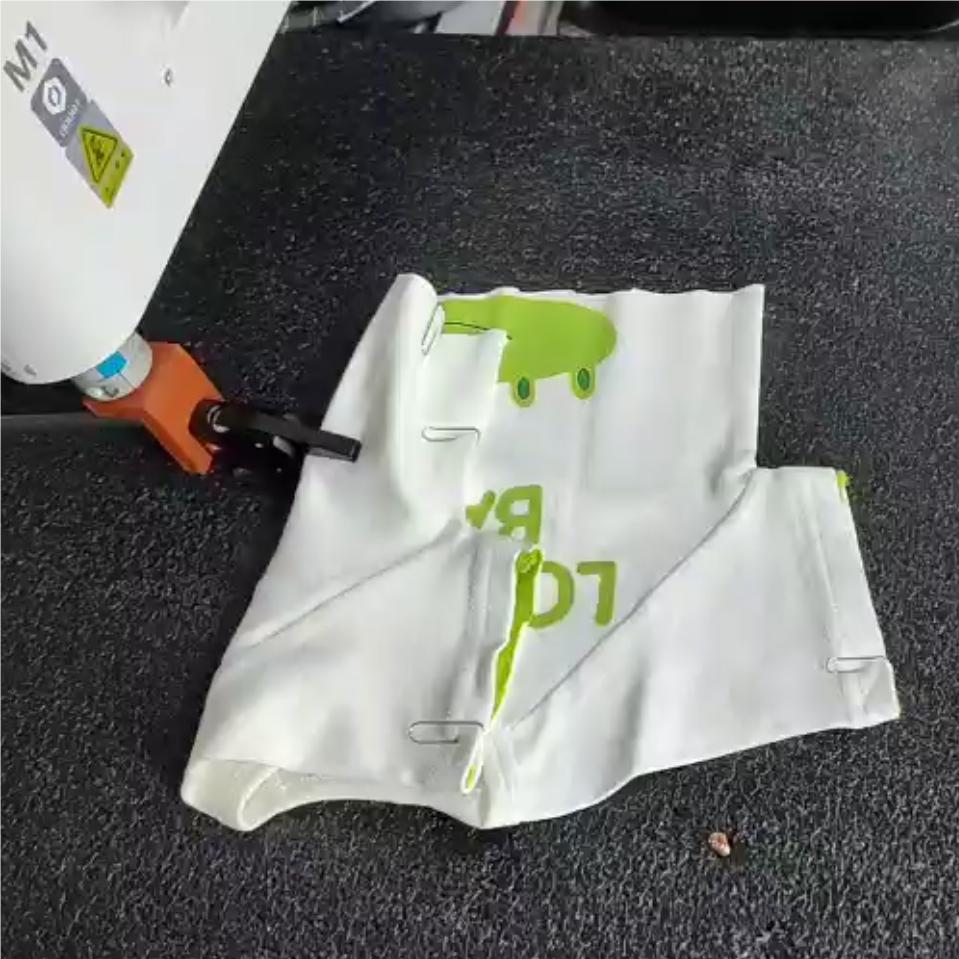

Video

This is a joint project co-advised by Prof. Wenping Wang from HKU and Prof. Yiping Feng from ZJU. The project includes three parts: 1) clothes landmark detection with HRNet, 2) robotic arm path planning, and 3) overall system integration. The camera captures an image of the clothes and passes it to my fork of HRNet. HRNet then predicts the keypoints of the clothes and passes them to the robot control script. Finally, the arm executes the folding process.


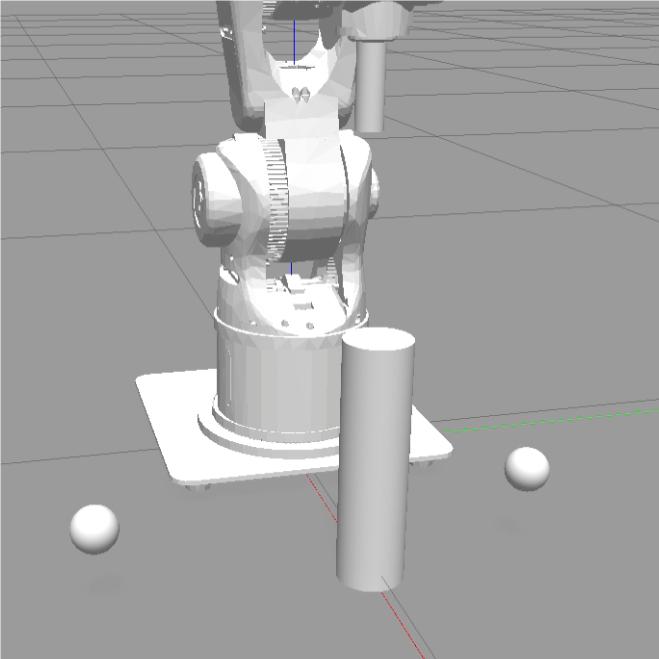

Video

This is a competition held by ZJU CSE. Participants had to control a robot arm to hit bells as many times as possible within one minute while avoiding obstacles. I loaded the robot model in Gazebo, implemented a torque control algorithm, and tested it on the real robot.


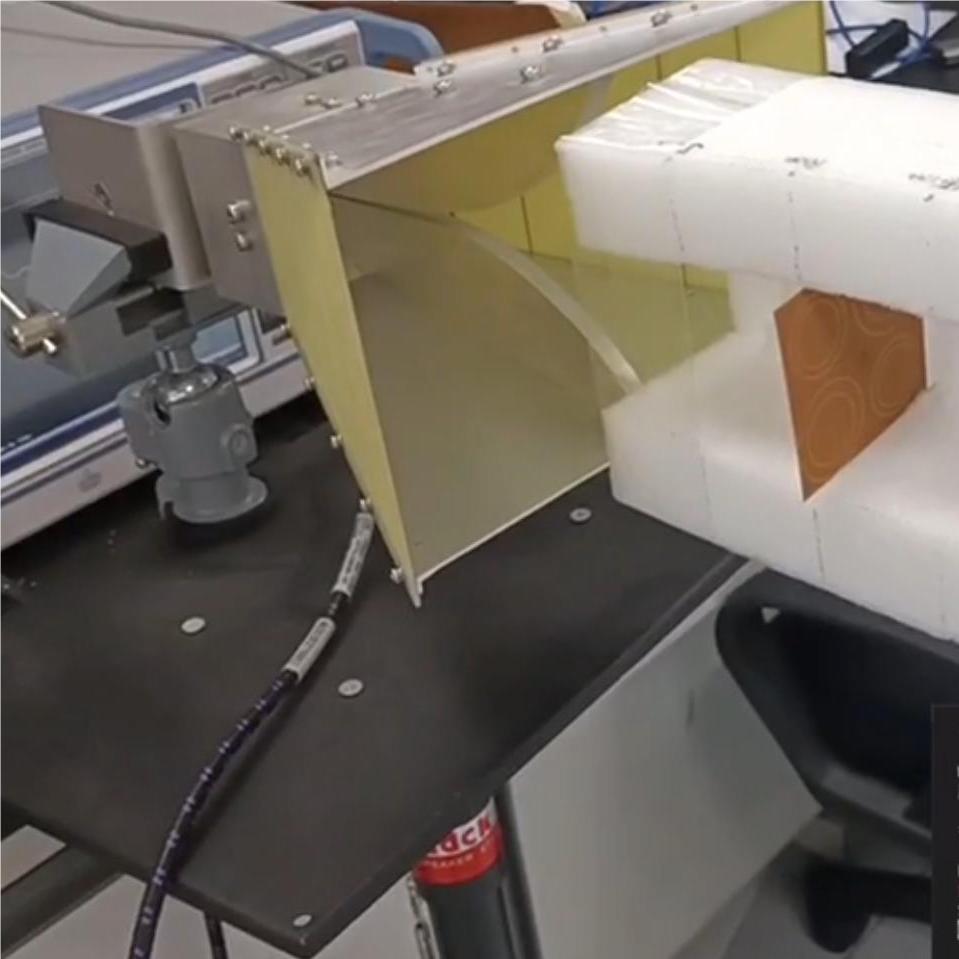

Video / Slides (in Chinese)

This was a 2020 training program in which I designed a new type of chipless RFID tag to reduce tag production cost. The tag can be correctly detected by the RFID reader at distances of about 5 to 80 cm, a range that covers common detection distances in warehouse logistics tasks.